I re-found the other thing in the Chapter 3 notes that I had trouble wrapping my mind around. In deriving the closed form formula for merge sort, Vassos makes the leap from

T(n) = cn + log2(n)dn (*)

which is defined for all n which are powers of 2, to

T(n) <= klog2(n) (**)

as an upper bound.

now, factoring (*) I can easily obtain T(n) = (c + log2(n)d) n

...... but from there it's a stretch for me to get to (**). What is k?

T(n) = (c + log2(n)d) n = [c/log2(n) + d] log2(n) n

and c >= c/log2(n) so maybe k = c + d and

T(n) = (c + log2(n)d) n = [c/log2(n) + d] log2(n) n <= [c + d] log2(n) n = k log2(n) n ....?

Let's hope the course notes get to it.

--------------------

Actually, it turns out that k is going to be 2c + 4d.

I guess it's one of those things you work out during your proof, and that you can't actually know before.

Thinking back to CSC 165 that totally makes sense. In big theta (or big O or Omega) proofs, we almost always figured out what c and b were going to be as we went along.

Also, in this particular case k turned out that way because he made the step

2cn + 2dn <= 2cn logn2(n) + 2dn log2(n) given that n >=2.

Other steps are definitely also possible, and would have resulted in different k values.

-------------------------------------------

Difficulty 2

-------------------------------------------

now struggling with the conversion of 3.12 to 3.13, as below

I understand that by assuming a special value for n, namely that n is a power of b, we are able to collapse the floor/ceiling terms into the generic fraction. That's not hard to follow. The confusing part for me is the change in domain specification. Why n = 1 and n> 1 now, not 1<= n < b and n <= b ?

------------------------------

got it.

The smallest possible power of b is one. Namely, b^0. All other powers of b are > 1, and therefore the domain of n can be broken up in this way.

In this special domain of n where n can only be a power of b, there is no n s.t. 1 <= n < b other than n = 1. The next value of n, after n = b^0 = 1, is n = b^1 = b, which falls outside of 1<= n < b.

-------------------------------------------

Difficulty 3

-------------------------------------------

not a difficulty, but a discovery.

x^(log(y)) = y(^log(x)) provided the base is shared.

Cool!

Testing it out ...

x = 3

y = 8

base = 2

x^(log(y)) = 3^(3) = 27

y(^log(x)) = [ roughly ] 8 ^ (1.58496)

= [roughly] 26.9999

Very cool!

-------------------------------------------

Difficulty 4

-------------------------------------------

Lower bound part of Master's Theorem -

Why the d requirements?

Strictly positive lambda I understand. We need to avoid the 0 case, which is true but not very meaningful.

-----------------------------

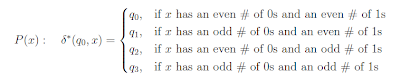

okay so if d = 0, then our derived closed form of

collapses to T(n) = cn^(logb(a)) in both cases [ b/c if a = b^l then logb(a) = l]. So the d = 0 case makes sense, allowing lambda prime to be c.

hmmm..

and then I guess the other cases also follow from this. if d != 0 then it is either <0 or >0. We don't allow it to be < 0 because negative steps doesn't make sense. You can't undo work.

So in this context, if d != 0 then d > 0. And so the d > 0 cases above divide into the two cases outlined by the closed form here.

Actually no, no they don't. They split into three. The a = b^l case is evident. But the a != b^l case divides into into both a < b^l and a > b^l..

--------------------------------------------------------------------------------

Done Chapter 3. Woohoo!